Trust Me: Using Multisensory Learning in Mathematics and Reading

2-MIN. READ

Find out how Liz's loss of taste and smell during the pandemic helped her teach students trust and conceptual understanding through multisensory learning.

Discover four strategies to maintain joy and perspective during high-stakes testing.

Discover creative ways to celebrate growth and demonstrate appreciation toward teachers.

Get tips, best practices, and success stories from other educators.

3-MIN. READ

Explore four key takeaways on how Curriculum Associates uses feedback to develop accessible products.

2-MIN. READ

Here are four tips for understanding and delivering mathematics instruction based on current strategies and standards.

2-MIN. READ

Find out how celebrating student growth, both big and small, fosters a love for learning in the classroom.

2-MIN. READ

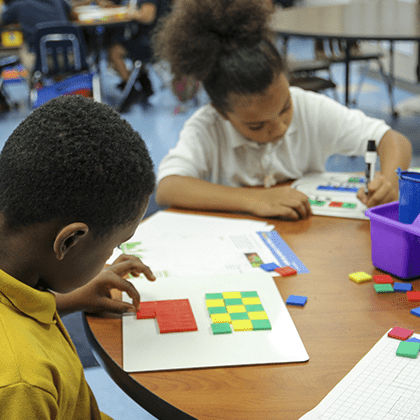

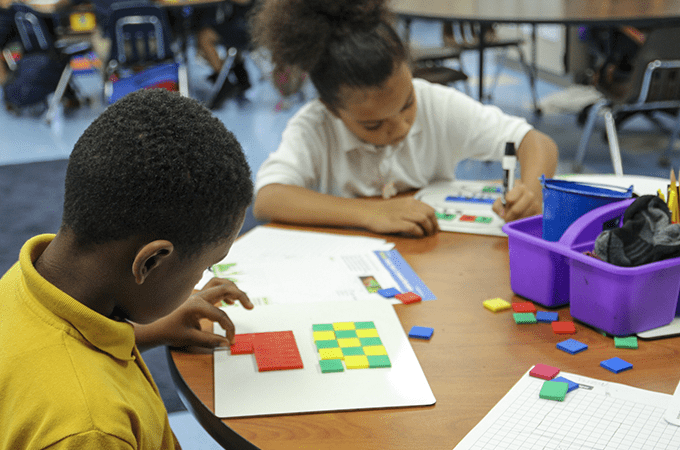

Discover why using manipulatives in math is important.

2-MIN. READ

Implement these engaging games in the classroom to make building math fluency fun.

2-MIN. READ

Teachers are essential to student growth. Discover ways to celebrate and demonstrate their enormous contributions.

2-MIN. READ

Get tips for setting up math centers in your classroom to support student growth.

2-MIN. READ

Find out how building trust and creating a safe space help you connect with middle schoolers and enhance student learning.

2-MIN. READ

Learn four strategies to maintain a positive perspective during high-stakes testing.

2-MIN. READ

Algebra-ready students will be prepared to succeed in high-level mathematics. Learn how to determine if your students are ready.

2-MIN. READ

Acceleration focused on content and timing allows the whole class to access grade-level content.